Advancing earthquake and tsunami science: Tōhoku four years later

Four years after one of the largest earthquakes in recorded history devastated Japan, Stanford geophysicists Greg Beroza, Eric Dunham, and Paul Segall provide new insights that help clarify why previous assumptions about the fault had been so wrong. Using new technologies, they explain what happened during the earthquake and tsunami, and discuss ongoing research that helps society better prepare for similar events in the future.

By

Miles Traer

March 9, 2015

On a Friday afternoon in March of 2011, one of the largest earthquakes in recorded history literally shook the world. The Tōhoku earthquake moved the main island of Japan eight feet to the east, generated tsunami waves in excess of 130 feet, and damaged three nuclear reactors at the Fukushima Daiichi Power Plant. Nearly 16,000 people lost their lives, and the ground shaking and subsequent tsunami caused $235 billion in damage.

The event registered magnitude 9.0 and released nearly 50 times more energy than the 1906 San Francisco earthquake and over 1,000 times more than the 1989 Loma Prieta earthquake. It was one of only four magnitude 9 earthquakes to have occurred in the past century. It was a massive event, and one that caught the scientific community off guard because few people thought that such a large earthquake was even possible in that part of the world.

Now, four years later, scientists have analyzed the data surrounding the Tōhoku earthquake to try to figure out why their previous assumptions were wrong. With new technologies and improved scientific analyses, researchers were able to learn valuable lessons about earthquake and tsunami generation and their associated risks. As urban populations around the world continue to grow in seismically active areas, understanding the science of earthquakes and tsunamis remains vital for hazards assessment. New insights and technological advances provide a better understanding of what happened in March of 2011 and inform how to better prepare for similar events in the future.

Devastation caused by the Tōhoku earthquake and resulting tsunami.

A patchwork fault and surprising discoveries

Japan is no stranger to large earthquakes. Even a quick Wikipedia search reveals many magnitude 6, 7, and 8 events over the past hundred years. Japan’s modern network of seismometers and GPS stations offers information about earthquakes in unprecedented detail. But a magnitude 9 event, which releases roughly thirty-two times more energy than a magnitude 8 event, didn’t seem possible because of the way researchers thought the fault behaved.
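
The “thirty-two times” figure follows from the standard energy-magnitude relation, log10(E) ≈ 1.5M + 4.8 (E in joules), under which each whole step in magnitude multiplies the radiated energy by about 10^1.5 ≈ 32. The short Python sketch below applies that relation to the comparisons made earlier in this article. The magnitudes assigned to the 1906 San Francisco (7.9) and 1989 Loma Prieta (6.9) earthquakes are commonly cited values assumed here, not figures from the researchers.

```python
def energy_ratio(m_large: float, m_small: float) -> float:
    """Approximate ratio of radiated seismic energy between two magnitudes.

    Based on log10(E) = 1.5*M + 4.8 (E in joules); the constant cancels in a
    ratio, so each unit of magnitude is a factor of 10**1.5, about 32.
    """
    return 10 ** (1.5 * (m_large - m_small))


# Assumed magnitudes for the comparison events (commonly cited values).
comparisons = {
    "a magnitude 8 event": 8.0,
    "1906 San Francisco (M 7.9, assumed)": 7.9,
    "1989 Loma Prieta (M 6.9, assumed)": 6.9,
}

for name, magnitude in comparisons.items():
    print(f"M 9.0 vs {name}: about {energy_ratio(9.0, magnitude):,.0f}x the energy")
```

Run as-is, it reports factors of roughly 32, 45, and 1,400, in line with the “thirty-two times,” “nearly 50 times,” and “over 1,000 times” comparisons. This is an approximation: magnitude alone does not determine how strongly any particular place shakes.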

Generally speaking, faults operate in two different ways: they can lock and build up stress over time, or they can creep and constantly relieve stress. Broadly, creeping faults are safe because the stress simply doesn’t accumulate. Locked faults are the problem.

“For big earthquakes to occur a big fault must be present. And there is certainly a big fault offshore Japan,” says Paul Segall, professor of Geophysics at Stanford. “But most people thought that the Tōhoku fault was compartmentalized into locked and creeping patches that couldn’t break all together. This idea turned out to be tragically wrong.”

Shortly following the earthquake, Segall and his students began poring over the GPS data and constructing a new model of the fault’s behavior. They also analyzed the region’s earthquake history and, using sophisticated kinematic models, found that the data allowed much larger sections of the fault to be locked and building up stress over time than previously thought.

“In going through all of the data, we found that it was actually pretty easy to get magnitude 9 earthquakes to build up every 500 to 1,000 years or so with that much locked fault.”
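
Segall’s point about recurrence can be checked with back-of-the-envelope arithmetic. A locked patch accumulates a slip deficit equal to coupling × plate rate × time. Releasing that deficit in one event produces a seismic moment M0 = μ·A·D, which converts to moment magnitude via Mw = (2/3)(log10 M0 - 9.1). The Python sketch below is not the group’s kinematic model. The 30 GPa rigidity, the 300 km × 150 km patch, and the 8 cm/yr convergence rate are assumed round numbers chosen only for illustration.

```python
import math

SHEAR_MODULUS_PA = 3.0e10  # assumed rigidity of the rock around the fault (30 GPa)


def moment_magnitude(m0_newton_m: float) -> float:
    """Hanks-Kanamori moment magnitude; seismic moment M0 in newton-meters."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)


def accumulated_magnitude(length_m: float, width_m: float,
                          plate_rate_m_per_yr: float, years: float,
                          coupling: float = 1.0) -> float:
    """Magnitude of one earthquake that releases all slip deficit stored on a patch.

    coupling = 1.0 means fully locked; values near 0 mean mostly creeping,
    so little slip deficit (and little stress) accumulates.
    """
    if not 0.0 < coupling <= 1.0:
        raise ValueError("coupling must be in (0, 1]")
    slip_deficit_m = coupling * plate_rate_m_per_yr * years  # slip owed to the plates
    m0 = SHEAR_MODULUS_PA * length_m * width_m * slip_deficit_m  # M0 = mu * A * D
    return moment_magnitude(m0)


# Hypothetical patch: 300 km along strike, 150 km down dip, ~8 cm/yr convergence.
for years in (500, 1000):
    mw = accumulated_magnitude(300e3, 150e3, 0.08, years)
    print(f"{years:>5} years fully locked -> Mw ~ {mw:.1f}")
```

With these assumptions, 500 and 1,000 years of full locking give roughly Mw 9.1 and 9.3. Lowering `coupling` toward zero, as on a creeping fault, shrinks the stored slip and the resulting magnitude. This is why the earlier picture of a fault broken into small locked patches implied smaller earthquakes.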

During the years-long investigation, Segall and graduate student Andreas Mavrommatis made a surprising discovery with potential implications for how earthquakes are generated. Prior to the earthquake, geophysicists thought that strain would build across the fault at a constant rate as the Pacific plate subducted beneath Japan, with the deformation recorded by the network of GPS stations. The constant motion along the creeping sections of the fault would also cause small repeating earthquakes to occur over many years. But when they plotted the GPS data and analyzed the small repeating earthquakes, neither looked constant at all.

“That meant the fault was getting less locked from 1996 to 2010, what geophysicists call uncoupling. Parts of the fault got more slippery as time went on,” said Segall. The analysis suggests that something unusual was going on under Tōhoku leading up to the 2011 earthquake.

“We don’t think that the accelerating slip was nucleation (initial slip) of the earthquake itself, because of the spatial and temporal scales involved,” Segall said. “However, there is a question as to whether the non-steady slip somehow impacted the occurrence of the earthquake. It’s an open question, and one we’re still dealing with today.”

A crack in the world

As Segall and his students were questioning the previous model of the fault responsible for the Tōhoku earthquake, Stanford geophysicist Greg Beroza and his students were trying to image the earthquake itself.

The Wayne Loel Professor in Earth, Energy & Environmental Sciences, Beroza has been developing new technologies to create virtual earthquakes to simulate the intensity and variability of ground shaking, with particular interest paid to densely populated urban areas. When the Tōhoku earthquake hit, Beroza, like Segall, questioned why it was so big.

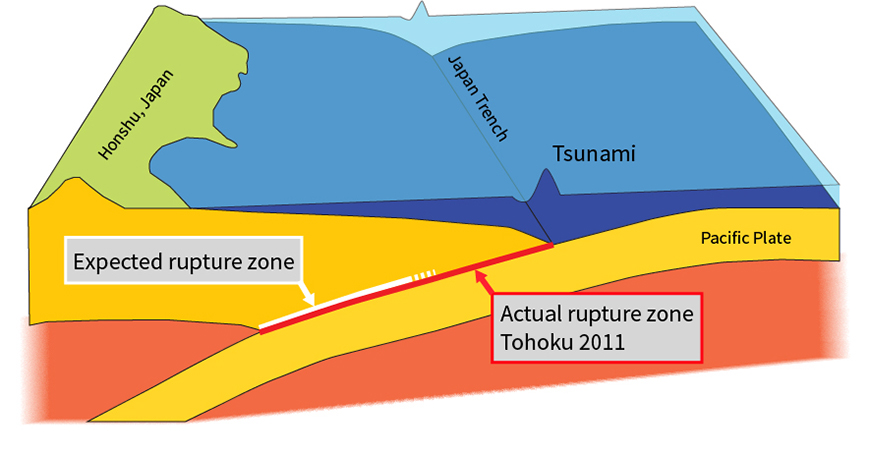

“In addition to the patch model (sometimes called the Seismic Asperity Model), many people thought that any rupture on the fault couldn’t break all the way to the surface trench at the seafloor,” Beroza said. “A rupture all the way to the trench is much more dangerous, more capable of creating a large tsunami.”

Within weeks of the earthquake, Beroza and graduate student Annemarie Baltay (now a postdoctoral fellow at the United States Geological Survey in Menlo Park) contacted Satoshi Ide, professor of seismology at the University of Tokyo. Together, they quickly established a research plan to image the slip on the fault and see how far the rupture had propagated.

Using ground motion data recorded very far from the earthquake, they discovered that the earthquake demonstrated two different modes of rupture, beginning with a deep energetic rupture that later triggered a shallow rupture with slower slip rates nearer the surface.

“Using the imaging technique, our rupture model showed slip all the way to the trench,” Beroza said. “This was somewhat surprising because many people thought that the fault couldn’t rupture like this through the loose sediments in the trench.”

Schematic representation of the fault zone that generated the Tōhoku earthquake. The rupture zone expected prior to the 2011 earthquake is shown as the bold white line while the actual rupture zone of the 2011 earthquake is shown as the bold red line (by Miles Traer).

Eric Dunham, assistant professor of Geophysics at Stanford, brought his understanding of the mechanics and physics of earthquakes to simulate the fault rupture using sophisticated computer models.

The fault drops down underneath Japan, but it does so gradually. “Seismic waves leave the fault, come up to the seafloor, and get reflected back down, getting trapped in this little wedge of material called the frontal prism. The trapped waves amplify and drive further slip on the fault, allowing the rupture to propagate to the surface,” said Dunham.

The work from Dunham, Beroza, Baltay, and Ide provided some of the earliest explanations for why the earthquake had been so massive, shaken the ground so violently, and generated such a large tsunami.

The tsunami

Despite the violent shaking caused as the fault ruptured beneath Japan, most people remember the tsunami that followed. News sites across the world replayed images of the 130-foot waves crashing against the coast. Scientists including Eric Dunham wanted to know why the tsunami was so large.

During the earthquake, the Pacific plate slid about half the length of a football field beneath Japan. The slip on the fault caused the seafloor to move up and down, pushing the ocean with it and generating a tsunami. One of the first questions to cross Dunham’s mind was how to determine the size of the tsunami before it made landfall.
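
How fault slip becomes seafloor motion can be sketched geometrically. Suppose the overriding plate moves up-dip as a rigid block along a fault dipping at angle δ. A slip D then lifts the seafloor by D·sin δ and shifts it horizontally by D·cos δ. The values below (50 m of slip, a 10° dip near the trench) are assumptions for illustration. A rigid block also ignores elastic bending, and a full elastic dislocation model is needed to capture, for example, the subsidence that occurs farther landward.

```python
import math


def seafloor_displacement(slip_m: float, dip_deg: float) -> tuple[float, float]:
    """Split thrust-fault slip into horizontal and vertical motion of the hanging wall.

    Rigid-block approximation: the overriding plate moves up-dip along a plane
    dipping at dip_deg, so horizontal = slip*cos(dip) and vertical = slip*sin(dip).
    """
    dip = math.radians(dip_deg)
    return slip_m * math.cos(dip), slip_m * math.sin(dip)


# Assumed values: ~50 m of slip (about half a football field) on a shallow
# fault dipping ~10 degrees near the trench.
horizontal, vertical = seafloor_displacement(50.0, 10.0)
print(f"horizontal ~ {horizontal:.0f} m, vertical ~ {vertical:.1f} m")
```

This gives about 49 m of horizontal and about 8.7 m of vertical motion. Both components can displace water, because horizontal motion of a sloping seafloor also pushes the ocean above it.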

Seismic waves and tsunami waves travel at different speeds. The rupture broke through to the surface nearly 100 miles from the Japanese mainland. Even at that distance, the seismic waves took only about a minute to reach the Tōhoku region. The tsunami waves took 15 to 20 minutes.
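
The gap between these arrival times comes from very different wave speeds. Seismic waves travel through rock at several kilometers per second. A tsunami in water of depth h travels at roughly sqrt(g·h), the shallow-water wave speed. The Python sketch below assumes an S-wave speed of 3.5 km/s, a sound speed in seawater of 1.5 km/s, and a uniform 2 km ocean depth. Real bathymetry varies greatly between the trench and the coast, so the numbers are only indicative.

```python
import math

G = 9.81            # gravitational acceleration, m/s^2
MILE_M = 1609.344   # meters per statute mile


def tsunami_speed(depth_m: float) -> float:
    """Shallow-water wave speed sqrt(g*h); valid when wavelength >> depth."""
    return math.sqrt(G * depth_m)


distance_m = 100 * MILE_M  # rupture-to-coast distance quoted in the text

# Assumed speeds: S waves ~3.5 km/s in the crust, sound in seawater ~1.5 km/s,
# and a uniform 2 km ocean depth for the tsunami (real depths vary widely).
speeds = {
    "seismic S wave": 3500.0,
    "ocean sound wave": 1500.0,
    "tsunami (2 km depth)": tsunami_speed(2000.0),
}

for name, speed in speeds.items():
    print(f"{name:>22}: {distance_m / speed / 60:5.1f} min")
```

It prints under a minute for the seismic waves, under two minutes for the ocean sound waves, and about 19 minutes for the tsunami, consistent with the timings above. That lead of more than a quarter hour is the window a local tsunami warning could exploit.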

Dunham began creating computer simulations of the earthquake and resulting tsunami. “In running the simulations, we saw that the earthquake generates extremely fast, huge-amplitude waves – a mixture of seismic waves in the solid Earth and sound waves in the ocean,” Dunham explained. “Those waves turn out to have amplitudes that are very much related to what was happening 100 miles offshore, the very region where we desperately need information.”

With a tsunami early warning system in mind, Dunham continues to work on generating sophisticated computer models to help predict the size of tsunamis generated by large subduction zone earthquakes.

Japan is currently deploying a large number of underwater pressure sensors to measure tsunami waves directly. “But there are other signals, like the ocean sound waves and the seismic waves generated by the earthquake and those will arrive much faster,” Dunham said. “If we can come up with the correct model, we might be able to use the faster moving signals to give people a warning about the size of the incoming tsunami.”

While long-range tsunami warnings are already quite good, local tsunami warning systems need work to provide longer preparation times and more accurate estimates of tsunami wave heights. As Dunham explained, “The seismic waves give us some potential to predict the size of the tsunami waves almost immediately after the earthquake.”

Remaining questions

Four years after the Tōhoku earthquake, scientists still have questions about rupture dynamics, fault mechanics, rock physics, and tsunami generation. But the lessons of Tōhoku extend well beyond Japan.

“Probably the biggest message overall for both scientists and for disaster preparedness people is don’t get locked into one paradigm,” Paul Segall offered. “In Tōhoku, many people limited how they thought about the fault in ways that were not required by the data. If we are going to be better prepared for events like this, the best thing we can do is consider what’s possible given the available data.”

Greg Beroza added, “The biggest remaining question is what does this mean for other subduction zones, like those in the northwestern US, or Chile, or Mexico? Do we have to reconsider what these faults are capable of?”

Using new technologies, scientists such as Beroza, Segall, Dunham, Ide, Baltay, Mavrommatis, and others continue to probe these questions, providing valuable insights into earthquake dynamics and helping society prepare for the earthquakes that will inevitably follow.

Greg Beroza said that Tōhoku serves as a reminder. “Even in a place like Japan with great records going back many years, we can still dramatically underestimate what the Earth is capable of.”